ICASSP 2026 URGENT Challenge

How to Participate

- Fill in the google form.

- If you are new to URGENT challenges, You will receive credentials for leaderboard.

- Otherwise, you will get notifications when approved for leaderboard submission.

- Check Track 1 (Universal Speech Enhancement) and Track 2 (Speech Quality Assessment) for rules, data and baseline.

- Log in and submit results to Track 1 and Track 2

Motivation

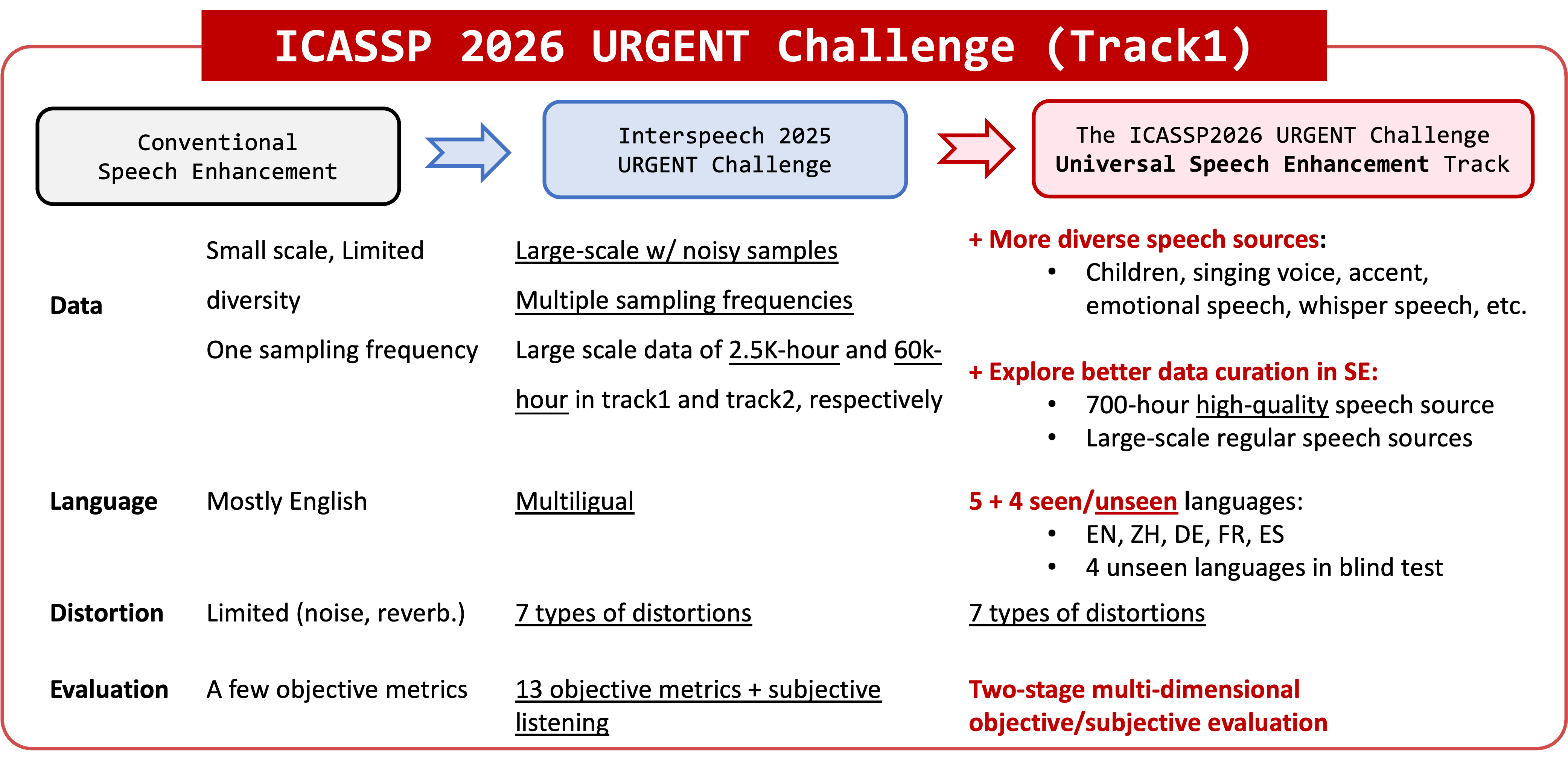

The ICASSP 2026 URGENT challenge (Universal, Robust and Generalizable speech EnhancemeNT) is a speech enhancement challenge held at ICASSP 2026 SP Grand Challenge. We aim to build universal speech enhancement models for unifying speech processing in a wide variety of conditions.

The ICASSP 2026 URGENT Challenge aims to further promote the research on Universal, Robust and Generalizable speech EnhancemeNT. This year’s challenge focuses on the following aspects:

-

Data Curation in Large-scale Datasets. Some recent studies reveal diminishing returns from scaling datasets for speech enhancement (SE)

, and data curation is crucial for training SE models with large-scale data . The challenge aims to draw people’s attention to this phenomenon, so as to make better use of large-scale data for speech enhancement. -

More Diverse Speech Sources. While prior URGENT challenges addressed speech diversity through acoustics and language, the third challenge expands to critical understudied dimensions like age, accents, whispered/singing voices, and emotional speech.

-

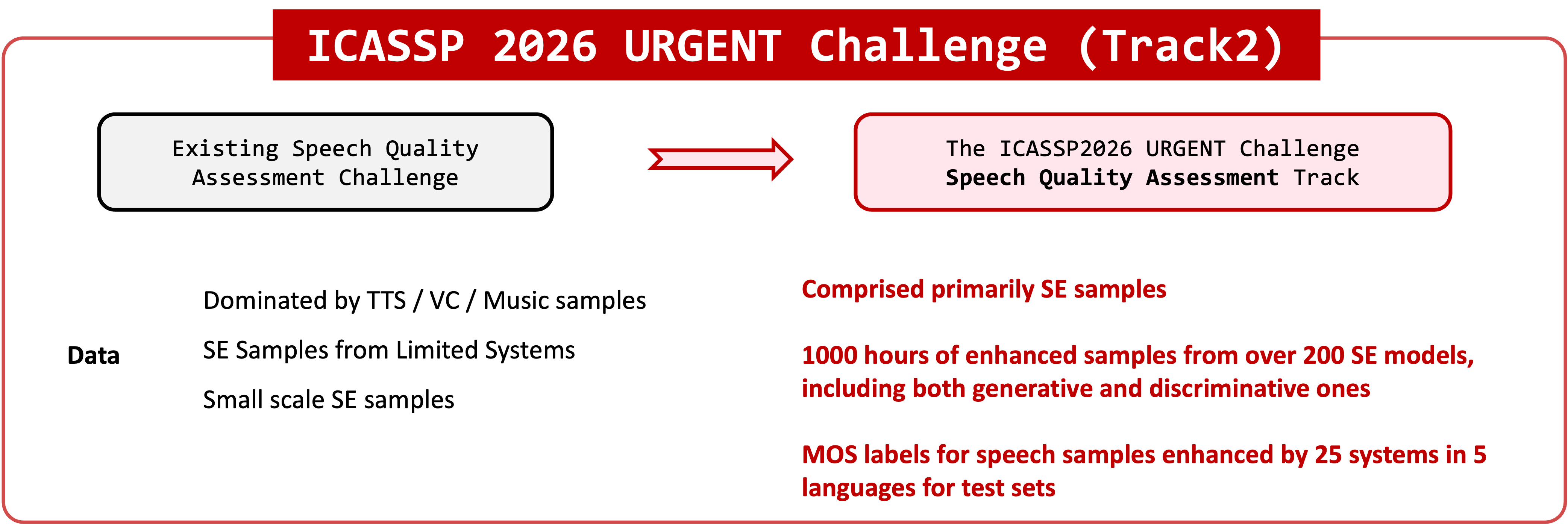

Speech Quality Assessment (SQA) Track Based on Human Ratings. The ICASSP 2026 URGENT Challenge introduces a new track for perceptual quality prediction models using human ratings, featuring large-scale, distortion-diverse data tailored for SE—unlike existing SQA challenges.

Task Introduction

Track 1: Speech Enhancement

The task of this challenge is to build a single speech enhancement system to adaptively handle input speech with different distortions (corresponding to different SE subtasks), different domains (e.g., language, age, accents, emotion), different input formats (e.g., sampling frequencies) in different acoustic environments (e.g., noise and reverberation).

The large-scale training data will consist of several public corpora of speech, noise, and RIRs. We provide a 700-hour curated training set as a baseline. And we encourage participants to explore advanced data curation techniques to utilize a large-scale noisy training dataset. Please check the Track1 page for more details.

Track 2: Speech Quality Assessment

The task of this track is to build a robust, generalizable speech quality assessment (SQA) model that predicts mean opinion scores (MOS) for enhanced speech. Systems will be evaluated using correlation and error metrics — MSE (Mean Squared Error), LCC (linear correlation coefficient), SRCC (Spearman’s rank correlation coefficient), and KTAU (Kendall’s tau) — between the predicted MOS and the provided MOS labels. Data preparation scripts and baseline codes are available at https://github.com/urgent-challenge/urgent2026_challenge_track2. We allow the use of any public data and publicly available models. See the Track2 page for full details.

Contact

-

We have a Slack channel for real-time communication.

-

For any further questions, drop an email at urgent.challenge@gmail.com.