FAQ

Below we summarize some frequently asked questions (FAQ) about the URGENT Challenge. If you have any other questions, please feel free to contact us.

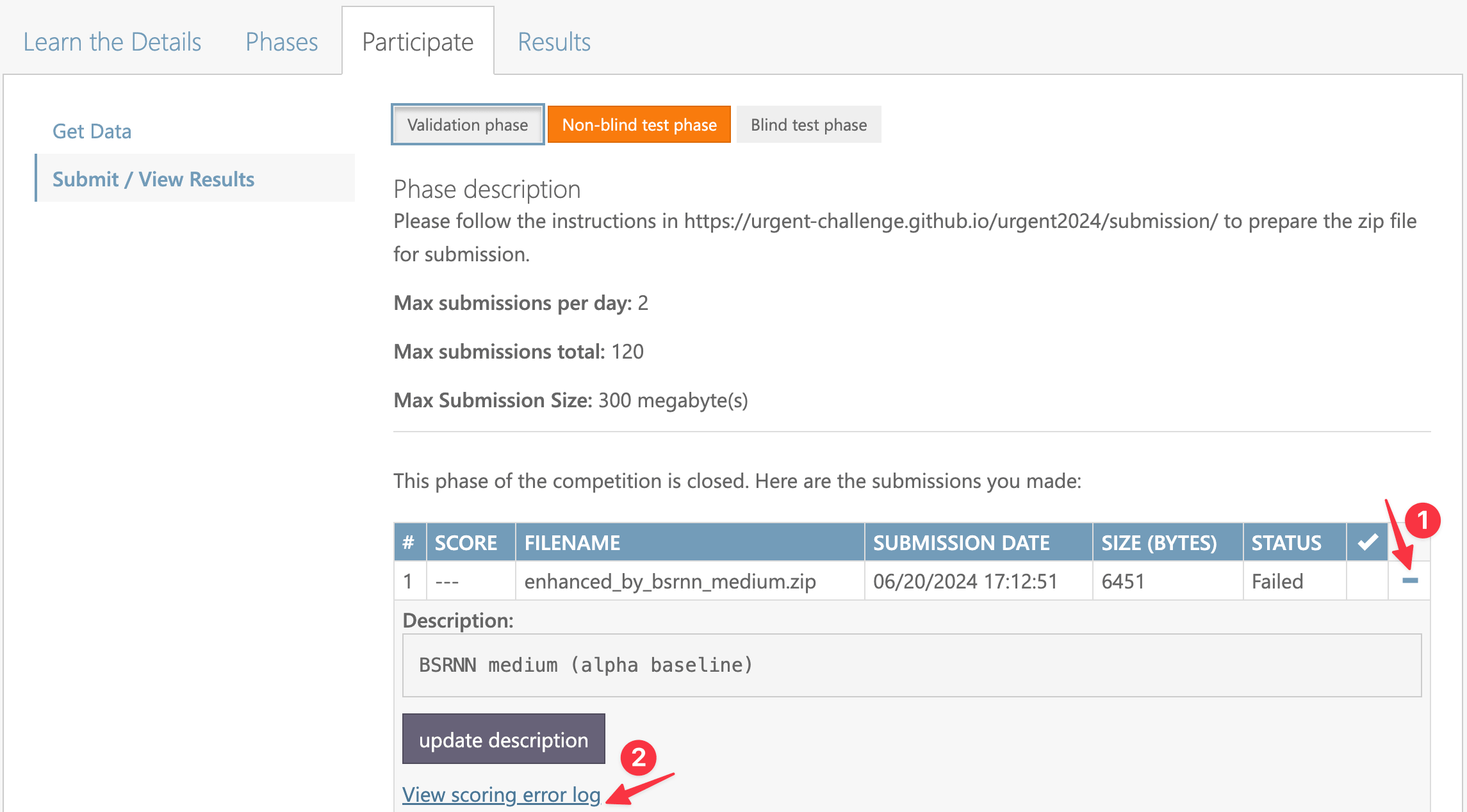

1. How can I check why my submission failed on the leaderboard?

Go to Participate → Submit / View Results and expand the corresponding failed submission. Then click View scoring error log to download the error log file, which contains detailed information about the failure.

2. What does the error message from the leaderboard mean?

Message 1:

data_pairs.append((uid, refs[uid], audio_path))

KeyError: 'fileid_10009'

Message 2:

assert ref.shape == inf.shape, (ref.shape, inf.shape)

AssertionError: ((315934,), (315936,))

Message 3:

RuntimeError: Error : flac decoder lost sync.

Message 4:

RuntimeError: Error : unknown error in flac decoder.

Message 5:

slurmstepd: error: *** JOB 24880048 ON r288 CANCELLED AT 2024-08-02T04:17:40 DUE TO TIME LIMIT ***

Timeout: The evaluation server is busy. Please try to resubmit later.

Message 6:

RuntimeError: CUDA error: uncorrectable ECC error encountered

CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

Compile with `TORCH_USE_CUDA_DSA` to enable device-side assertions.
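Messages 1 and 2 can often be caught locally before uploading. Judging only from the tracebacks, Message 1 appears to arise when a submitted file name has no matching entry among the references, and Message 2 when an enhanced signal has a different number of samples than its reference. The following is a minimal sketch, not part of the official challenge tooling: the function names, the `.flac` suffix, the folder layout, and the assumption that each enhanced file should mirror its noisy input in name and length are all illustrative.

```python
from pathlib import Path


def compare_ids(noisy_dir, enhanced_dir, suffix=".flac"):
    """Compare file IDs (file names without suffix) in two folders.

    Returns (missing, unexpected):
      missing    - IDs present in noisy_dir but absent from enhanced_dir
      unexpected - IDs present in enhanced_dir but absent from noisy_dir
                   (assumed to be the kind of ID that triggers the KeyError)
    """
    noisy_ids = {p.stem for p in Path(noisy_dir).glob(f"*{suffix}")}
    enhanced_ids = {p.stem for p in Path(enhanced_dir).glob(f"*{suffix}")}
    missing = sorted(noisy_ids - enhanced_ids)
    unexpected = sorted(enhanced_ids - noisy_ids)
    return missing, unexpected


def length_mismatches(noisy_lengths, enhanced_lengths):
    """Return {uid: (noisy_len, enhanced_len)} for IDs whose sample counts differ.

    Both arguments are hypothetical dicts mapping a file ID to its number
    of samples; how they are filled depends on your audio library.
    """
    shared = noisy_lengths.keys() & enhanced_lengths.keys()
    return {
        uid: (noisy_lengths[uid], enhanced_lengths[uid])
        for uid in sorted(shared)
        if noisy_lengths[uid] != enhanced_lengths[uid]
    }
```

If you read audio with the `soundfile` package (an assumption, any reader works), the sample counts could be collected with `soundfile.info(path).frames`. Actually decoding each enhanced file once in the same loop may also reveal corrupted FLAC files of the kind reported in Messages 3 and 4.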

3. How can I speed up calculate_wer.py?

calculate_wer.py takes around 30 to 40 minutes (depending on the environment) to evaluate 1000 samples on a single GPU.

Most of this time is spent on beam search during decoding.

To make it faster, you can skip the beam search by setting BEAMSIZE to 1.

Note that BEAMSIZE is set to 5 in the leaderboard evaluation.

If you would like to check that your local scores are consistent with those on the leaderboard, set BEAMSIZE to 5.

4. How long is the maximum duration of the speech in the test set?

The maximum duration will be around 15 seconds. Please note that this number may change.

5. Why has my leaderboard registration not been approved?

Leaderboard registrations are usually approved within a day. If your application has not been approved after more than a day, please check whether you have submitted the Google Form. We do not approve a registration until we receive the form. If you have submitted it but still have not been approved, please reach out to the organizers.