Data

The blind test set is available here

Brief data description

Training/validation data

The training and validation data are both simulated by using several public speech/noise/rir corpora (see the table below for more details). We provide the data preparation script which automatically downloads and pre-processes those data.

There are two types of validation data. One is automatically generated during data preparation and the other is provided by the orgnizeres

- Unofficial validation set: participants can generate their own validation set using the validation subset of the official challenge datasets to choose the best model.

- Official validation set: we organizeres provide the validation set for the leaderboard submission. It contains 1000 samples and all of them are synthetic data. The maximum duration per utterance is 15 seconds. Unlike the unofficial one, we manually picked up clean speeches for the noisy corpora (i.e., CommonVoice) so it better reflectes the enhancement quality. Noisy speech, Clean speech, and metadata are available.

Non-blind test set

The non-blind test set are prepared in a similar way as the official validation set but there are several differences:

- The test split of the official challenge datasets were used.

- Several unseen noise and rir were also used when simulating the non-blind test set.

- Sampling rates are almost equally distributed (there are ~1000/7 data for each of 8k, 16k, 22.05k, 24k, 32k, 44.1k, and 48kHz). We downsampled some data to achieve this.

The noisy and clean speeches as well as the metadata are available. After the non-blind test phase ends, clean speech and metadata will be available.

Blind test set

The blind test set, which will be used for the final ranking, is available here.

To evaluate the universality, robustness, and generalizability of the submitted systems as outlined in the theme of this challenge, the blind test set is primarily from domains other than the training set:

- consist of 50% real-recorded data (without audio ground truth) and 50% synthetic data,

- primarily be derived from from unseen corpora/datasets,

- include one unseen language in addition to five languages present in the training data,

- be distorted by some unseen distortions in addition to those used in training, and

- contain 150 samples for each language, amounting to 900 samples in total.

Note that the evaluation procedure during the blind testing phase differs from that in the validation/non-blind test phase in the following ways:

- Two additional metrics (POLQA and MOS) will be included. (As previously announced, only the English subset will be used for the MOS evaluation due to the short evaluation period.)

- Only a subset of metrics (DNSMOS, NISQA, UTMOS, MOS, and CER if transcription is available) will be considered for the real-recorded data.

Detailed data description

The training and validation data are both simulated based on the following source data. Note that the validation set made by the provided script is different from the official validation set used in the leaderboard, although the data source and the type of distortions do not change.

The challenge has two tracks:

- First track: We limit the duration of MLS and CommonVoice, resulting in ~2.5k hours of speech.

- Second track: We do not limit the duration of MLS and CommonVoice datasets, resulting in ~60k hours of speech.

| Type | Corpus | Condition | Sampling Frequency (kHz) | Duration of track 1 (track2) | License |

|---|---|---|---|---|---|

| Speech | LibriVox data from DNS5 challenge | Audiobook | 8~48 | ~350 h | CC BY 4.0 |

| LibriTTS reading speech | Audiobook | 8~24 | ~200 h | CC BY 4.0 | |

| VCTK reading speech | Newspaper, etc. | 48 | ~80 h | ODC-BY | |

| WSJ reading speech | WSJ news | 16 | ~85 h | LDC User Agreement | |

| EARS speech | Studio recording | 48 | ~100 h | CC-NC 4.0 | |

| Multilingual Librispeech (de, en, es, fr) |

Audiobook | 8~48 | ~450 (48600) h | CC0 | |

| CommonVoice 19.0 (de, en, es, fr, zh-CN) | Crowd-sourced voices | 8~48 | ~1300 (9500) h | CC0 | |

| Noise | Audioset+FreeSound noise in DNS5 challenge | Crowd-sourced + Youtube | 8~48 | ~180 h | CC BY 4.0 |

| WHAM! noise | 4 Urban environments | 48 | ~70 h | CC BY-NC 4.0 | |

| FSD50K (human voice filtered) | Crowd-sourced | 8~48 | ~100 h | CC0, CC-BY, CC-BY-NC, CC Sampling+ | |

| Free Music Archive (medium) | Free Music Archive (directed by WFMU) | 8~44.1 | ~200 h | CC | |

| Wind noise simulated by participants | - | any | - | - | |

| RIR | Simulated RIRs from DNS5 challenge | SLR28 | 48 | ~60k samples | CC BY 4.0 |

| RIRs simulated by participants | - | any</a> | - | - |

For participants who need access to the WSJ data, please reach out to the organizers (urgent.challenge@gmail.com) for a temporary license supported by LDC. Please include your name, organization/affiliation, and the username used in the leaderboard in the email for a smooth procedure. Note that we do not accept the request unless you have registered to the leaderboard.

We allow participants to simulate their own RIRs using existing tools

For example, RIR-Generator, pyroomacoustics, gpuRIR, and so on. for generating the training data. The participants can also propose publicly available real recorded RIRs to be included in the above data list during the grace period (SeeTimeline). Note: If participants used additional RIRs to train their model, the related information should be provided in the README.yaml file in the submission. Check the template for more information.We allow participants to simulate wind noise using some tools such as SC-Wind-Noise-Generator. In default, the simulation script in our repository simulates 200 and 100 wind noises for training and validation for each sampling frequency. The configuration can be easily changed in wind_noise_simulation_train.yaml and wind_noise_simulation_validation.yaml

Pre-processing

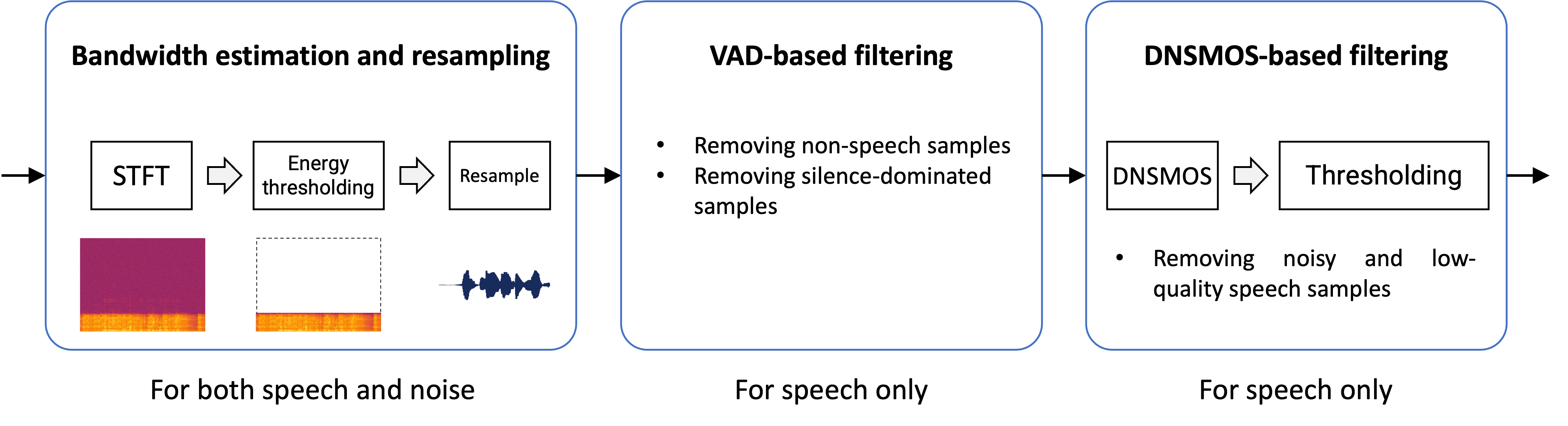

Before simulation, some speech and noise data are pre-processed to filter out low-quality samples and to detect the true sampling frequency (SF).

Specifically, we applied data filtering (described below) to LibriVox and CommonVoice (track1)

The pre-processing procedure includes:

- We first estimate the effective bandwidth of each speech and noise sample based on the energy thresholding algorithm proposed in

https://github.com/urgent-challenge/urgent2025_challenge/blob/main/utils/estimate_audio_bandwidth.py . This is critical for our proposed method to successfully handle data with different SFs. Then, we resample each speech and noise sample accordingly to the best matching SF, which is defined as the lowest SF among {8, 16, 22.05, 24, 32, 44.1, 48} kHz that can fully cover the estimated effective bandwidth. - A voice activity detection (VAD) algorithm

https://github.com/urgent-challenge/urgent2025_challenge/blob/main/utils/filter_via_vad.py is further used to detect “bad” speech samples that are actually non-speech or mostly silence, which will be removed from the data. - Finally, the non-intrusive DNSMOS scores (OVRL, SIG, BAK)

https://github.com/microsoft/DNS-Challenge/blob/master/DNSMOS/dnsmos_local.py are calculated for each remaining speech sample. This allows us to filter out noisy and low-quality speech samples via thresholding each scorehttps://github.com/urgent-challenge/urgent2025_challenge/blob/main/utils/filter_via_dnsmos.py .

We finally curated a list of speech sample (~2500 hours) and noise samples (~500 hours) for Track1 based on the above procedure that will be used for simulating the training and validation data in the challenge.

Note that the data filtering is inperfect and the dataset still has non-ignorable amount of noisy samples. One of the goal of this challenge is to encourage participants to develop how to leverage (or filter out) such noisy data to improve the final SE performance.

Simulation

With the proviced scripts in the next section, the simulation data can be generated offline in two steps.

-

In the first step, a manifest

meta.tsvis firstly generated bysimulation/generate_data_param.pyfrom the given list of speech, noise, and room impulse response (RIR) samples. It specifies how each sample will be simulated, including the type of distortion to be applied, the speech/noise/RIR sample to be used, the signal-to-noise ratio (SNR), the random seed, and so on. -

In the second step, the simulation can be done in parallel via

simulation/simulate_data_from_param.pyfor different samples according to the manifest while ensuring reproducibility. This procedure can be used to generate training and validation datasets.

For the training set, we recommend dynamically generating degraded speech samples during training to increase the data diversity.

Distortions

In this challenge, the SE system has to address the following seven distortions. In addition to the first four distortions considered in our first challenge, we added three more distortions (bold ones) often observed in real recordings. Furthermore, in this challenge, inputs may have multiple distortions.

We provide an example simulation script as simulation/simulate_data_from_param.py.

- Additive noise

- Reverberation

- Clipping

- Bandwidth limitation

- Codec distortion

- Packet loss

- Wind noise

Simulation metadata

The manifest mentioned above is a tsv file containing several columns (separated by \t). For example:

| id | noisy_path | speech_uid | speech_sid | clean_path | noise_uid | snr_dB | rir_uid | augmentation | fs | length | text |

|---|---|---|---|---|---|---|---|---|---|---|---|

| unique ID | path to the generated degraded speech | utterance ID of clean speech | speaker ID of clean speech | path to the paired clean speech | utterance ID of noise | SNR in decibel | utterance ID of the RIR | augmentation type | sampling frequency | sample length | raw transcript |

| fileid_1 | simulation_validation/noisy/fileid_1.flac | p226_001_mic1 | vctk_p226 | simulation_validation/clean/fileid_1.flac | JC1gBY5vXHI | 16.106643714525433 | mediumroom-Room119-00056 | bandwidth_limitation-kaiser_fast->24000 | 48000 | 134338 | Please call Stella. |

| fileid_2 | simulation_validation/noisy/fileid_2.flac | p226_001_mic2 | vctk_p226 | simulation_validation/clean/fileid_2.flac | file205_039840000_loc32_day1 | 2.438365163611807 | none | bandwidth_limitation-kaiser_best->22050 | 48000 | 134338 | Please call Stella. |

| fileid_1561 | simulation_validation/noisy/fileid_1561.flac | p315_001_mic1 | vctk_p315 | simulation_validation/clean/fileid_1561.flac | AvbnjyrHq8M | 1.3502745341597029 | mediumroom-Room076-00093 | clipping(min=0.016037324066971528, |

48000 | 114829 | <not-available> |

Note

-

If the

rir_uidvalue is notnone, the specified RIR is applied to the clean speech sample. -

If the

augmentationvalue is notnone, the specified augmentation is applied to the degraded speech sample. -

<not-available>in thetextcolumn is a placeholder for transcripts that are not available. -

The audios in

noisy_path,clean_path, and (optional)noise_pathare consistently scaled such thatnoisy_path = clean_path + noise_path. -

However, the scale of the enhanced audio is not critical for objective evaluation the challenge, as the evaluation metrics are made largely insensitive to the scale. For subjective listening in the final phase, however, it is recommended that the participants properly scale the enhanced audios to facilitate a consistent evaluation.

-

For all different distortion types, the original sampling frequency of each clean speech sample is always preserved, i.e., the degraded speech sample also shares the same sampling frequency. For

bandwidth_limitationaugmentation, this means that the generated speech sample is resampled to the original sampling frequencyfs.