Baseline

Baseline code and checkpoint

Please refer to Baselines in ESPnet below for more details.

- Baseline script: https://github.com/kohei0209/espnet/tree/urgent2025/egs2/urgent25/enh1

- Baseline TF-GridNet pre-trained model https://huggingface.co/kohei0209/tfgridnet_urgent25/tree/main

Basic Framework

The basic framework is detailed in the URGENT challenge 2024 description paper

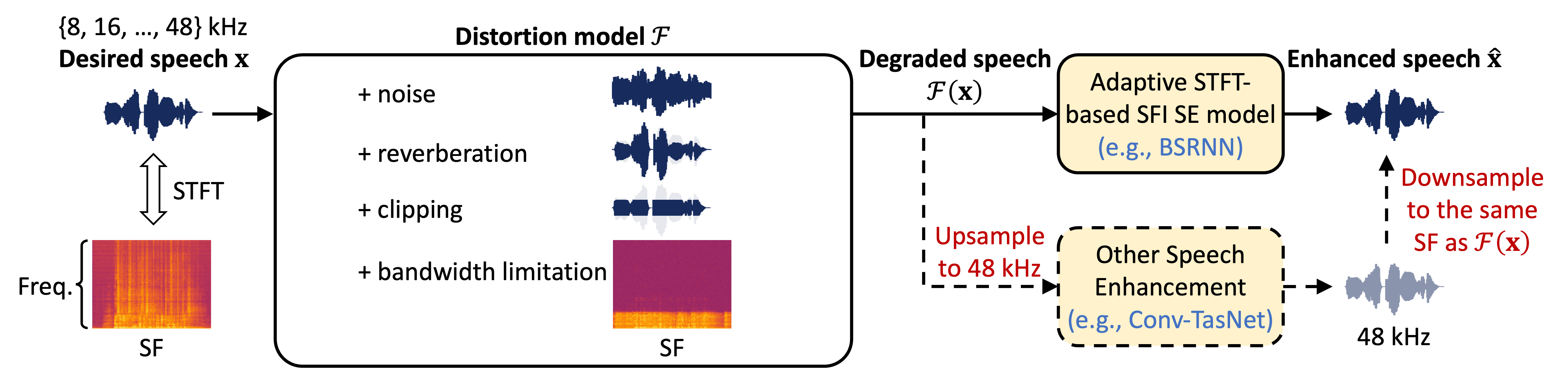

As depicted in the figure above, we design a distortion model (simulation stage)

During training and inference, the processing of different SFs is supported for both conventional SE models (lower-right) that usually only operate at one SF and adaptive STFT-based sampling-frequency-independent (SFI) SE models (upper-right) that can directly handle different SFs.

- For conventional SE models (e.g., Conv-TasNet

), we always upsample its input (degraded speech) to the highest SF (48 kHz) so that the model only need to operate at 48 kHz. The model output (48 kHz) is then downsampled to the same SF as the degraded speech. - For adaptive STFT-based SFI

SE models (e.g., BSRNN , TF-GridNet ), we directly feed the degraded speech of different SFs into the model, which can adaptively adjust their STFT/iSTFT configuration according to the SF and generate the enhanced signal with the same SF.

Baselines in ESPnet

We provide offical baselines and the corresponding recipe (egs2/urgent25/enh1) based on the ESPnet toolkit.

How to run training/inference is desribed in egs2/urgent25/enh1/README.md.

To install the ESPnet toolkit for model training, please follow the instructions at https://espnet.github.io/espnet/installation.html.

You can check “A quick tutorial on how to use ESPnet” to have a quick overview on how to use ESPnet for speech enhancement.

- For the basic usage of this toolkit, please refer to egs2/TEMPLATE/enh1/README.md.

- Several baseline models are provided in the format of a

YAMLconfiguration file inegs2/urgent25/enh1/conf/tuning/.- We will provide the pretrained TF-GridNet model using the provided config soon.

- To run a recipe, you basically only need to execute the following command after installing ESPnet from source:

For explanation of the arugments in./run.sh, please refer toegs2/TEMPLATE/enh1/enh.sh.