Data

Updates

❗️❗️[2025-05-16] The official evaluation datasets (validation, non-blind test, and blind test) have also been uploaded to Hugging Face. You can find them at https://huggingface.co/datasets/urgent-challenge/urgent2024_official. An additional speech quality assessment dataset with human-annotated mean opinion score (MOS) labels has also been released at https://huggingface.co/datasets/urgent-challenge/urgent2024_mos.

❗️❗️[2024-09-22] We have released the labels for the non-blind test dataset. You can download them from Google Drive.

❗️❗️[2024-09-19] We have released the official blind test dataset. This is the dataset that will be used for the final ranking. You can download it from Google Drive.

Note that unlike the non-blind test dataset, this dataset contains both simulated audios and real recordings

Their identities won't be revealed. . The former contains clean reference signals while the latter only has reference transcripts.So we will only calculate DNSMOS, NISQA, and WAcc metrics for the real recordings. For simulated data, all objective metrics listed in the Rules tab will be calculated.

After the leaderboard blind test phase ends (no further submissions accepted), we will additionally

- calculate the POLQA objective metric for the best submission of each team and insert it into the leaderboard as a part of the

Intrusive SE metriccategory.- obtain the MOS subjective score for the best submission of each team via crowd-sourcing based on the ITU-T P.808 standard

Due to our limited resource, we will subsample a fixed 300-sample subset from the full 1000-sample blind test set and use that for MOS evaluation of all teams' submissions. We will make sure the subset preserves as similar as possible the ranking structure of all teams as in the full blind test set. The utterance IDs of the subset will be released. . This score will be counted as a fifth category (Subjective SE metric) in addition to the four categories defined in the Rules tab.The final ranking will be obtained using the same methodology as defined in the Rules tab, but based on the five categories of metrics.

❗️❗️[2024-08-20] We have released the official non-blind test dataset. You can download it from Google Drive.

❗️❗️[2024-08-20] We have released the labels for the official validation dataset. You can download them from Google Drive.

❗️❗️[2024-06-20] We have released the official (complete) validation dataset. You can download it from Google Drive.

Note that this complete validation dataset is intended for assisting the development of challenge systems as well as for a fair comparison. The official validation leaderboard will only use a small portion

This subset can be downloaded from https://urgent-challenge.com/competitions/5#participate-get_data. of this dataset for evaluation.

Data description

The training and validation data are both simulated based on the following source data:

| Type | Corpus | Condition | Sampling Frequency (kHz) | Duration (after pre-processing) | License |

|---|---|---|---|---|---|

| Speech | LibriVox data from DNS5 challenge | Audiobook | 8~48 | ~350 h | CC BY 4.0 |

| LibriTTS reading speech | Audiobook | 8~24 | ~200 h | CC BY 4.0 | |

| CommonVoice 11.0 English portion | Crowd-sourced voices | 8~48 | ~550 h | CC0 | |

| VCTK reading speech | Newspaper, etc. | 48 | ~80 h | ODC-BY | |

| WSJ reading speech | WSJ news | 16 | ~85 h | LDC User Agreement | |

| Noise | Audioset+FreeSound noise in DNS5 challenge | Crowd-sourced + Youtube | 8~48 | ~180 h | CC BY 4.0 |

| WHAM! noise | 4 Urban environments | 48 | ~70 h | CC BY-NC 4.0 | |

| RIR | Simulated RIRs from DNS5 challenge | SLR28 | 48 | ~60k samples | CC BY 4.0 |

For participants who need access to the WSJ data, please reach out to the organizers (urgent.challenge@gmail.com) for a temporary license supported by LDC.

We also allow participants to simulate their own RIRs using existing tools

For example, RIR-Generator, pyroomacoustics, gpuRIR, and so on. for generating the training data. The participants can also propose publicly available real recorded RIRs to be included in the above data list during the grace period (SeeTimeline). Note: If participants used additional RIRs to train their model, the related information should be provided in the README.yaml file in the submission. Check the template for more information.

Pre-processing

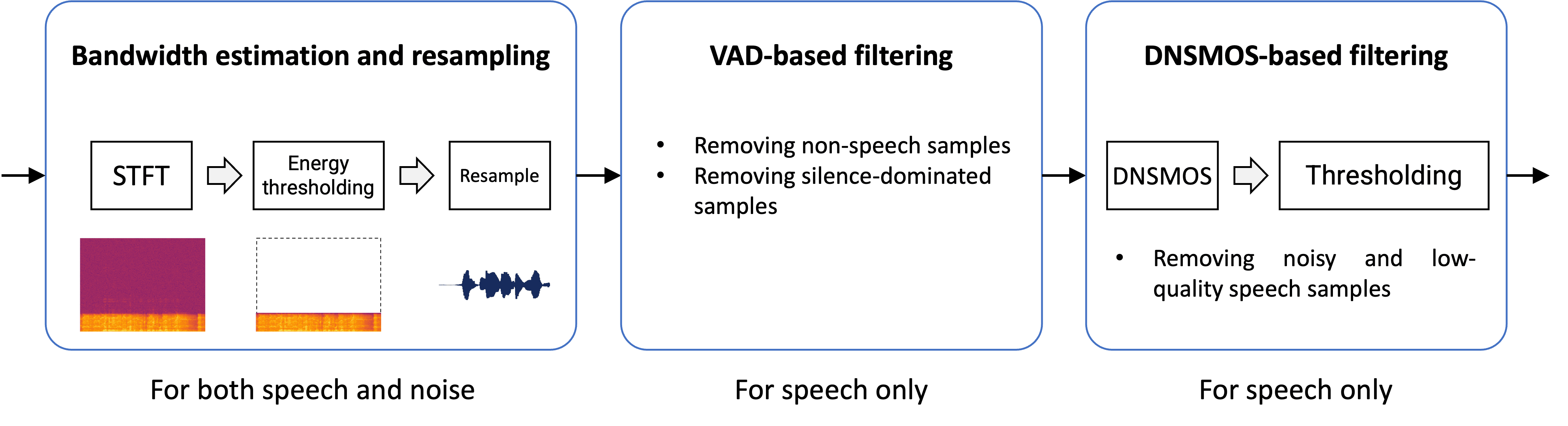

Before simulation, all collected speech and noise data are pre-processed to filter out low-quality samples and to detect the true sampling frequency (SF). The pre-processing procedure includes:

- We first estimate the effective bandwidth of each speech and noise sample based on the energy thresholding algorithm proposed in

https://github.com/urgent-challenge/urgent2024_challenge/blob/main/utils/estimate_audio_bandwidth.py . This is critical for our proposed method to successfully handle data with different SFs. Then, we resample each speech and noise sample accordingly to the best matching SF, which is defined as the lowest SF among {8, 16, 22.05, 24, 32, 44.1, 48} kHz that can fully cover the estimated effective bandwidth. - A voice activity detection (VAD) algorithm

https://github.com/urgent-challenge/urgent2024_challenge/blob/main/utils/filter_via_vad.py is further used to detect “bad” speech samples that are actually non-speech or mostly silence, which will be removed from the data. - Finally, the non-intrusive DNSMOS scores (OVRL, SIG, BAK)

https://github.com/microsoft/DNS-Challenge/blob/master/DNSMOS/dnsmos_local.py are calculated for each remaining speech sample. This allows us to filter out noisy and low-quality speech samples via thresholding each scorehttps://github.com/urgent-challenge/urgent2024_challenge/blob/main/utils/filter_via_dnsmos.py .

We finally curated a list of speech sample (~1300 hours) and noise samples (~250 hours) based on the above procedure that will be used for simulating the training and validation data in the challenge.

Simulation

With the proviced scripts in the next section, the simulation data can be generated offline in two steps.

-

In the first step, a manifest

meta.tsvis firstly generated bysimulation/generate_data_param.pyfrom the given list of speech, noise, and room impulse response (RIR) samples. It specifies how each sample will be simulated, including the type of distortion to be applied, the speech/noise/RIR sample to be used, the signal-to-noise ratio (SNR), the random seed, and so on. -

In the second step, the simulation can be done in parallel via

simulation/simulate_data_from_param.pyfor different samples according to the manifest while ensuring reproducibility. This procedure can be used to generate training and validation datasets.

For the training set, we recommend dynamically generating degraded speech samples during training to increase the data diversity.

Simulation metadata

The manifest mentioned above is a tsv file containing several columns (separated by \t). For example:

| id | noisy_path | speech_uid | speech_sid | clean_path | noise_uid | snr_dB | rir_uid | augmentation | fs | length | text |

|---|---|---|---|---|---|---|---|---|---|---|---|

| unique ID | path to the generated degraded speech | utterance ID of clean speech | speaker ID of clean speech | path to the paired clean speech | utterance ID of noise | SNR in decibel | utterance ID of the RIR | augmentation type | sampling frequency | sample length | raw transcript |

| fileid_1 | simulation_validation/noisy/fileid_1.flac | p226_001_mic1 | vctk_p226 | simulation_validation/clean/fileid_1.flac | JC1gBY5vXHI | 16.106643714525433 | mediumroom-Room119-00056 | bandwidth_limitation-kaiser_fast->24000 | 48000 | 134338 | Please call Stella. |

| fileid_2 | simulation_validation/noisy/fileid_2.flac | p226_001_mic2 | vctk_p226 | simulation_validation/clean/fileid_2.flac | file205_039840000_loc32_day1 | 2.438365163611807 | none | bandwidth_limitation-kaiser_best->22050 | 48000 | 134338 | Please call Stella. |

| fileid_1561 | simulation_validation/noisy/fileid_1561.flac | p315_001_mic1 | vctk_p315 | simulation_validation/clean/fileid_1561.flac | AvbnjyrHq8M | 1.3502745341597029 | mediumroom-Room076-00093 | clipping(min=0.016037324066971528, |

48000 | 114829 | <not-available> |

Note

-

If the

rir_uidvalue is notnone, the specified RIR is applied to the clean speech sample. -

If the

augmentationvalue is notnone, the specified augmentation is applied to the degraded speech sample. -

<not-available>in thetextcolumn is a placeholder for transcripts that are not available. -

The audios in

noisy_path,clean_path, and (optional)noise_pathare consistently scaled such thatnoisy_path = clean_path + noise_path. -

However, the scale of the enhanced audio is not critical for objective evaluation the challenge, as the evaluation metrics are made largely insensitive to the scale. For subjective listening in the final phase, however, it is recommended that the participants properly scale the enhanced audios to facilitate a consistent evaluation.

-

For all different distortion types, the original sampling frequency of each clean speech sample is always preserved, i.e., the degraded speech sample also shares the same sampling frequency. For

bandwidth_limitationaugmentation, this means that the generated speech sample is resampled to the original sampling frequencyfs.

Code

The data preparation and simulation scripts are available at https://github.com/urgent-challenge/urgent2024_challenge.